Breaking down and fixing an etcd cluster

etcd is a fast, reliable and fault-tolerant key-value database. It sits at the heart of Kubernetes and is an integral part of its control plane. That is why it is important to know how to back up etcd and how to restore both individual nodes and the entire etcd cluster.

In the previous article, we looked in detail at regenerating SSL certificates and static manifests for Kubernetes, as well as at restoring its control plane. This article is devoted entirely to restoring an etcd cluster.

First of all, a caveat: we will only consider the specific case where etcd is deployed and used as part of Kubernetes. The examples in this article assume that your etcd cluster is deployed using static manifests and runs inside containers.

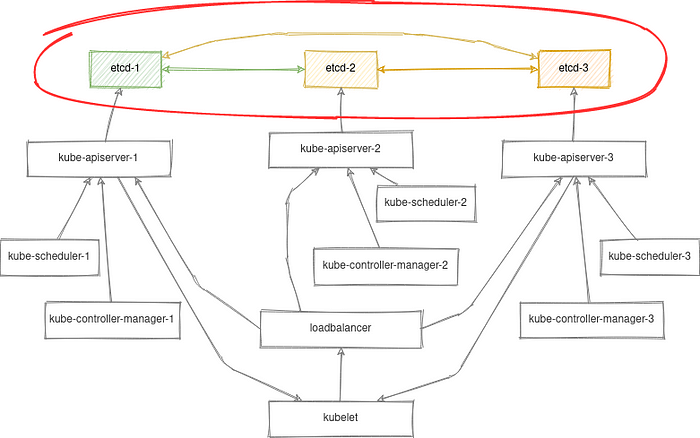

For clarity, let’s take the stacked control-plane nodes scheme from the previous article:

The commands below can also be run through kubectl, but here we deliberately abstract away from the Kubernetes control plane and manage the containerized etcd cluster locally with crictl.

This approach also lets you fix etcd even when the Kubernetes API is not working.

Preparation

The first thing to do is log in over SSH to one of the master nodes and find the etcd container:

CONTAINER_ID=$(crictl ps -a --label io.kubernetes.container.name=etcd --label io.kubernetes.pod.namespace=kube-system | awk 'NR>1{r=$1} $0~/Running/{exit} END{print r}')

Let’s also set an alias so that we don’t have to pass the certificate options to every command:

alias etcdctl='crictl exec "$CONTAINER_ID" etcdctl --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key --cacert /etc/kubernetes/pki/etcd/ca.crt'

These settings only last for the current shell session. You need to run both commands again in every new session, and you need to rerun the first one whenever the etcd container is recreated, because its ID changes. You can, of course, add them to your .bashrc, but that is beyond the scope of this article.
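Bash does not expand aliases in non-interactive shells by default, so the alias above will not work inside a script. Below is a minimal sketch of the same setup written as shell functions. It assumes the same certificate paths as the alias. The function names etcdctl and find_etcd_container are our own. If you have already defined the alias in your current shell, run unalias etcdctl first, because otherwise the alias interferes with the function definition.

```shell
# Print the ID of the etcd container: the first Running one,
# or the last container listed if none is running (same awk as above).
find_etcd_container() {
  crictl ps -a \
    --label io.kubernetes.container.name=etcd \
    --label io.kubernetes.pod.namespace=kube-system |
    awk 'NR>1{r=$1} $0~/Running/{exit} END{print r}'
}

# Run etcdctl inside the etcd container with the peer certificates,
# passing all arguments through (function form of the alias above).
etcdctl() {
  crictl exec "$CONTAINER_ID" etcdctl \
    --cert /etc/kubernetes/pki/etcd/peer.crt \
    --key /etc/kubernetes/pki/etcd/peer.key \
    --cacert /etc/kubernetes/pki/etcd/ca.crt \
    "$@"
}
```

Usage is the same as with the alias:

CONTAINER_ID=$(find_etcd_container)
etcdctl member list -w table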

If something has gone wrong and you can’t exec into the running container, check the etcd logs:

crictl logs "$CONTAINER_ID"

If the container does not even exist, make sure that the static manifest and all the certificates are in place. Reading the kubelet logs can also be very useful (for example, with journalctl -u kubelet on systemd-based hosts).

Checking cluster status

This part is simple:

# etcdctl member list -w table
+------------------+---------+-------+---------------------------+---------------------------+------------+
|        ID        | STATUS  | NAME  |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
+------------------+---------+-------+---------------------------+---------------------------+------------+
| 409dce3eb8a3c713 | started | node1 | https://10.20.30.101:2380 | https://10.20.30.101:2379 |      false |
| 74a6552ccfc541e5 | started | node2 | https://10.20.30.102:2380 | https://10.20.30.102:2379 |      false |
| d70c1c10cb4db26c | started | node3 | https://10.20.30.103:2380 | https://10.20.30.103:2379 |      false |
+------------------+---------+-------+---------------------------+---------------------------+------------+

Each etcd instance knows about all the other members. Membership information is stored in etcd itself, so any change to it is propagated to the other instances of the cluster.

An important note: the member list command only shows the cluster configuration, not the actual state of each instance. To check the state of the instances, use the endpoint status command. However, it requires you to list explicitly all the cluster endpoints you want to check.
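The command below collects these endpoints by grepping for the default client port 2379. Below is a minimal sketch of a variant that does not depend on the port number. It assumes the default ("simple") output format of etcdctl member list, in which fields are separated by ", " and the fifth field holds the client URLs. The function name cluster_endpoints is our own. Members that were added but have not started yet have an empty client URL field and are skipped.

```shell
# Read `etcdctl member list` (simple format) on stdin and print the client
# URLs of all members as one comma-separated line. Example input line:
# 409dce3eb8a3c713, started, node1, https://10.20.30.101:2380, https://10.20.30.101:2379, false
cluster_endpoints() {
  # Field 5 = client URLs; it is empty for members that have not started yet.
  awk -F', ' 'NF >= 5 && $5 != "" { print $5 }' | paste -s -d, -
}
```

With it, the list could be built as ENDPOINTS=$(etcdctl member list | cluster_endpoints). Recent etcdctl versions can also look up all endpoints on their own, using the --cluster flag of the endpoint commands, for example etcdctl endpoint status --cluster -w table.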

ENDPOINTS=$(etcdctl member list | grep -o '[^ ]\+:2379' | paste -s -d,)

etcdctl endpoint status --endpoints="$ENDPOINTS" -w table

If any of the endpoints is unreachable, you’ll see an error like this:

Failed to get the status of endpoint https://10.20.30.103:2379 (context deadline exceeded)

Removing a faulty node

Sometimes one of the nodes becomes inoperable and you need to bring the etcd cluster back to a working state. What should you do?

First, you need to remove the failed member:

etcdctl member remove d70c1c10cb4db26c

Before continuing, make sure that the etcd container is no longer running on the failed node and that the node no longer holds any etcd data:

rm -rf /etc/kubernetes/manifests/etcd.yaml /var/lib/etcd/

crictl rm "$CONTAINER_ID"

These commands remove the etcd static pod manifest and the /var/lib/etcd data directory on the node, and then remove the etcd container itself.

Alternatively, you can use the kubeadm reset command. Keep in mind, however, that it also removes all other Kubernetes-related resources and certificates from the node.
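To avoid copying the member ID by hand, you can look it up by node name. Below is a minimal sketch. It assumes the default simple output of etcdctl member list, in which fields are separated by ", " and the member name is the third field. The function name member_id_by_name is hypothetical. The lookup and the member remove call must run on one of the healthy nodes, because the removal goes through the surviving members.

```shell
# Print the member ID for the member named $1, reading the simple output of
# `etcdctl member list` on stdin (fields separated by ", ", name in field 3).
# Exits non-zero if no member has that name.
member_id_by_name() {
  awk -F', ' -v name="$1" '
    $3 == name { print $1; found = 1 }
    END { exit (found ? 0 : 1) }'
}
```

For example, the removal above could then be written as:

ID=$(etcdctl member list | member_id_by_name node3) && etcdctl member remove "$ID"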

Adding a new node

At this point, there are two options:

The first option is to simply add a new control-plane node using the standard kubeadm join mechanism:

kubeadm init phase upload-certs --upload-certs

kubeadm token create --print-join-command --certificate-key <certificate_key>

These commands generate the command that joins a new control-plane node to the Kubernetes cluster. Here <certificate_key> is the key printed by the first command. This case is described in detail in the official Kubernetes documentation, so we won’t dwell on it here.

This option is the most convenient when you deploy a new node from scratch or after running the kubeadm reset command.
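The certificate key from the first command can be passed to the second one automatically. Below is a minimal sketch. It assumes that kubeadm init phase upload-certs --upload-certs prints the key on the last line of its output, which is what we have seen in practice, so check this on your kubeadm version. The function name print_control_plane_join is hypothetical. The uploaded certificates expire after two hours, so generate the join command shortly before you use it.

```shell
# Print a control-plane join command with a freshly uploaded certificate key.
# Run it on an existing control-plane node.
print_control_plane_join() {
  local cert_key
  # Re-upload the control-plane certificates; the key is the last output line.
  # Without pipefail only tail's exit status is visible, so check for an empty key.
  cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
  [ -n "$cert_key" ] || return 1
  kubeadm token create --print-join-command --certificate-key "$cert_key"
}
```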

The second option is more precise, since it lets you make only the changes that etcd needs. Other containers on the node will not be affected.
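The first step of this option is to make sure that the etcd CA on the node is present and valid. Below is a minimal sketch of such a check, assuming openssl is available on the node. The function name check_etcd_ca is hypothetical. It treats the CA as valid when both files exist, the public key in the certificate matches the private key, and the certificate has not expired. This is a basic consistency check, not a full validation.

```shell
# Check the etcd CA in directory $1 (default /etc/kubernetes/pki/etcd):
# both files present, the key matches the certificate, not yet expired.
check_etcd_ca() {
  local dir="${1:-/etc/kubernetes/pki/etcd}" f cert_pub key_pub
  for f in ca.crt ca.key; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      return 1
    fi
  done
  # The same public key in the certificate and in the private key means they form a pair.
  cert_pub=$(openssl x509 -in "$dir/ca.crt" -noout -pubkey) || return 1
  key_pub=$(openssl pkey -in "$dir/ca.key" -pubout) || return 1
  if [ "$cert_pub" != "$key_pub" ]; then
    echo "ca.crt and ca.key do not match" >&2
    return 1
  fi
  # -checkend 0 fails if the certificate has already expired.
  openssl x509 -in "$dir/ca.crt" -noout -checkend 0 >/dev/null
}
```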

First, let’s make sure that our node has a valid CA certificate for etcd:

/etc/kubernetes/pki/etcd/ca.{key,crt}

If the CA is missing, copy these files from another node of your cluster. Now let’s generate the rest of the certificates for our node:

kubeadm init phase certs etcd-healthcheck-client

kubeadm init phase certs etcd-peer

kubeadm init phase certs etcd-server

Then join the node to the cluster:

kubeadm join phase control-plane-join etcd --control-plane

For reference, this command performs the following steps:

- Add a new member to the existing etcd cluster:

etcdctl member add node3 --endpoints=https://10.20.30.101:2379,https://10.20.30.102:2379 --peer-urls=https://10.20.30.103:2380

- Generate a new static manifest for etcd, /etc/kubernetes/manifests/etcd.yaml, with the options:

--initial-cluster-state=existing
--initial-cluster=node1=https://10.20.30.101:2380,node2=https://10.20.30.102:2380,node3=https://10.20.30.103:2380

These options make the new node join the existing etcd cluster automatically.
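The --initial-cluster value above is just the list of name=peer-URL pairs for all members, including the new one. Below is a minimal sketch that builds it from the simple output of etcdctl member list plus the name and peer URL of the node being added. The function name and its arguments are hypothetical. Members without a name (added but not yet started) are skipped, since the new node is passed explicitly. For reference, etcdctl member add itself also prints a matching ETCD_INITIAL_CLUSTER value.

```shell
# Build an --initial-cluster value: NAME=PEER_URL for every named member read
# from `etcdctl member list` (simple format) on stdin, plus the new member
# given as $1 (name) and $2 (peer URL).
initial_cluster() {
  awk -F', ' -v new_name="$1" -v new_url="$2" '
    # Field 3 = name, field 4 = peer URLs (comma-separated if there are several).
    $3 != "" && $3 != new_name {
      n = split($4, urls, ",")
      for (i = 1; i <= n; i++) out = out (out == "" ? "" : ",") $3 "=" urls[i]
    }
    END {
      if (new_name != "") out = out (out == "" ? "" : ",") new_name "=" new_url
      print out
    }'
}
```

For example, on a healthy node after the member add step:

etcdctl member list | initial_cluster node3 https://10.20.30.103:2380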

Creating a snapshot

Now let’s take a look at creating an etcd backup and restoring from it.

You can create a backup quite simply by executing the following command on any of your etcd nodes:

etcdctl snapshot save /var/lib/etcd/snap1.db

Note that I use /var/lib/etcd intentionally: this directory is already mounted into the etcd container (you can see this in the static manifest /etc/kubernetes/manifests/etcd.yaml).

After running this command, you’ll find a snapshot of your data at the specified path. Save it somewhere safe, and let’s look at the procedure for restoring from a backup.
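When backups are taken regularly, a timestamp in the file name keeps them from overwriting each other. Below is a minimal sketch. It assumes that etcdctl already resolves to the containerized command set up in the Preparation section, and that /var/lib/etcd is mounted into the container as described above. The function name take_snapshot and the snapshot-<UTC timestamp>.db naming scheme are our own. Depending on your etcd version, you can inspect the result with etcdctl snapshot status <file> -w table. In etcd 3.5 and later, this offline command is deprecated in etcdctl in favour of etcdutl snapshot status.

```shell
# Save a snapshot named snapshot-<UTC timestamp>.db into directory $1
# (default /var/lib/etcd, which the kubeadm manifest mounts into the
# container at the same path) and print its path.
# etcdctl's own output is sent to stderr so that stdout carries only the path.
take_snapshot() {
  local dir="${1:-/var/lib/etcd}" path
  path="$dir/snapshot-$(date -u +%Y%m%d-%H%M%S).db"
  etcdctl snapshot save "$path" >&2 || return 1
  echo "$path"
}
```

For example, SNAP=$(take_snapshot) stores the path of the new snapshot, which you can then copy off the node with scp or a similar tool.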

Restoring from a snapshot

Now let’s consider the case where everything is broken and we need to restore the cluster from a backup.
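The restore commands in this section differ only in --name, --initial-advertise-peer-urls and --initial-cluster. Below is a minimal sketch that prints, but does not run, the command for every node listed in a single --initial-cluster string, so that the values stay consistent across nodes. The function names are hypothetical. The snapshot path defaults to the /var/lib/etcd/snap1.db file used in this article. With etcd 3.5 and later, etcdctl snapshot restore is deprecated in favour of etcdutl snapshot restore, which accepts the same flags used here.

```shell
# Print the restore command for one node:
#   restore_cmd NAME PEER_URL INITIAL_CLUSTER [SNAPSHOT]
restore_cmd() {
  printf 'etcdctl snapshot restore %s --data-dir=/var/lib/etcd/new --name=%s --initial-advertise-peer-urls=%s --initial-cluster=%s\n' \
    "${4:-/var/lib/etcd/snap1.db}" "$1" "$2" "$3"
}

# Print one restore command per NAME=PEER_URL entry of the --initial-cluster
# string $1; $2 optionally overrides the snapshot path.
restore_cmds_for_cluster() {
  local entry
  for entry in $(echo "$1" | tr ',' ' '); do
    restore_cmd "${entry%%=*}" "${entry#*=}" "$1" "$2"
  done
}
```

For example, restore_cmds_for_cluster node1=https://10.20.30.101:2380 prints the single-node command for node1. Passing the full three-node string prints one line per node, and each line is meant to be run on the node it names.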

We have the snapshot file snap1.db created in the previous step. Now let’s completely remove the etcd static pods and data from all our nodes:

rm -rf /etc/kubernetes/manifests/etcd.yaml /var/lib/etcd/member/

crictl rm "$CONTAINER_ID"

Again, there are two options:

The first option is to create a single-node etcd cluster and join the remaining nodes to it using the procedure described above. On the first node, run:

kubeadm init phase etcd local

This command generates a static manifest for etcd with the options:

--initial-cluster-state=new
--initial-cluster=node1=https://10.20.30.101:2380

This way we get a brand-new, empty etcd cluster on a single node:

# etcdctl member list -w table
+------------------+---------+-------+---------------------------+---------------------------+------------+
|        ID        | STATUS  | NAME  |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
+------------------+---------+-------+---------------------------+---------------------------+------------+
| 1afbe05ae8b5fbbe | started | node1 | https://10.20.30.101:2380 | https://10.20.30.101:2379 |      false |
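+------------------+---------+-------+---------------------------+---------------------------+------------+

Let’s restore the backup on the first node. The etcd container has just been recreated, so first rerun the CONTAINER_ID command from the Preparation section so that the etcdctl alias points to the new container: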

etcdctl snapshot restore /var/lib/etcd/snap1.db \
  --data-dir=/var/lib/etcd/new \
  --name=node1 \
  --initial-advertise-peer-urls=https://10.20.30.101:2380 \
  --initial-cluster=node1=https://10.20.30.101:2380

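mv /var/lib/etcd/member /var/lib/etcd/member.old
mv /var/lib/etcd/new/member /var/lib/etcd/member
crictl rm "$CONTAINER_ID"
rm -rf /var/lib/etcd/member.old/ /var/lib/etcd/new/

Here we move the current data aside, put the restored data in its place, and remove the container so that the kubelet starts it again with the restored data. On the remaining nodes, run the command to join the cluster: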

kubeadm join phase control-plane-join etcd --control-plane

The second option is to restore the backup on all nodes of the cluster at once. To do this, copy the snapshot file to every node and perform the restore procedure described above. In this case, however, the --initial-cluster option passed to etcdctl must list all the nodes of the cluster, and --name and --initial-advertise-peer-urls must match the node the command runs on, for example:

For node1:

etcdctl snapshot restore /var/lib/etcd/snap1.db \
  --data-dir=/var/lib/etcd/new \
  --name=node1 \
  --initial-advertise-peer-urls=https://10.20.30.101:2380 \
  --initial-cluster=node1=https://10.20.30.101:2380,node2=https://10.20.30.102:2380,node3=https://10.20.30.103:2380

For node2:

etcdctl snapshot restore /var/lib/etcd/snap1.db \
  --data-dir=/var/lib/etcd/new \
  --name=node2 \
  --initial-advertise-peer-urls=https://10.20.30.102:2380 \
  --initial-cluster=node1=https://10.20.30.101:2380,node2=https://10.20.30.102:2380,node3=https://10.20.30.103:2380

For node3:

etcdctl snapshot restore /var/lib/etcd/snap1.db \
  --data-dir=/var/lib/etcd/new \
  --name=node3 \
  --initial-advertise-peer-urls=https://10.20.30.103:2380 \
  --initial-cluster=node1=https://10.20.30.101:2380,node2=https://10.20.30.102:2380,node3=https://10.20.30.103:2380

Loss of quorum

Sometimes you lose most of the nodes of the cluster and etcd becomes inoperable. You can no longer add or remove members, nor create snapshots.

There is a way out of this situation: edit the static manifest on one of the surviving nodes and add the --force-new-cluster flag to the etcd command:

/etc/kubernetes/manifests/etcd.yaml

After that, the etcd instance will restart as a single-member cluster:

# etcdctl member list -w table
+------------------+---------+-------+---------------------------+---------------------------+------------+
|        ID        | STATUS  | NAME  |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
+------------------+---------+-------+---------------------------+---------------------------+------------+
| 1afbe05ae8b5fbbe | started | node1 | https://10.20.30.101:2380 | https://10.20.30.101:2379 |      false |
+------------------+---------+-------+---------------------------+---------------------------+------------+

Now clean up the remaining nodes and add them back to the cluster as described above. Don’t forget to remove the --force-new-cluster flag once you are done ;-)
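Editing the manifest by hand works, but both steps are easy to script. Below is a minimal sketch. It assumes the layout kubeadm generates, in which the etcd flags are list items under command: directly below a "- etcd" line. The function names are hypothetical. Both functions print the edited manifest instead of editing it in place. Write the result to a file outside /etc/kubernetes/manifests and then move it back, because the kubelet watches that directory and may pick up stray files left in it. Removing the flag afterwards matters: as far as we understand the etcd documentation, leaving it in place would force a new single-member cluster again on the next restart of that instance.

```shell
# Print manifest $1 with "- --force-new-cluster" inserted right after the
# "- etcd" command line, using the same indentation. Unchanged if present.
add_force_new_cluster() {
  if grep -q -- '- --force-new-cluster' "$1"; then
    cat "$1"
    return
  fi
  awk '
    { print }
    /^[ \t]*- etcd[ \t]*$/ && !done {
      indent = $0
      sub(/-.*/, "", indent)
      print indent "- --force-new-cluster"
      done = 1
    }' "$1"
}

# Print manifest $1 without the --force-new-cluster line.
remove_force_new_cluster() {
  grep -v -- '^[[:space:]]*- --force-new-cluster[[:space:]]*$' "$1"
}
```

For example, with /root used as a scratch directory outside the manifests directory:

add_force_new_cluster /etc/kubernetes/manifests/etcd.yaml > /root/etcd.yaml && mv /root/etcd.yaml /etc/kubernetes/manifests/etcd.yaml

Once the other nodes have rejoined, run the same command with remove_force_new_cluster.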