Optimizing the software development process for continuous integration and flow of work

This article is part of a series of articles about how the software development industry seems to have difficulty adopting research findings. If you haven’t already, you can read the introductory article, The Obsession with Dev-Fu and process puritanism, to get a bit of context.

In this article I will dive into a development process that I find greatly optimizes for continuous integration of work and a frictionless flow of value. Why these attributes are important is outlined in detail in the introductory article.

A short recap of why they matter is provided here. (If you have read the introductory article, jump to the next header :-))

In the 2016 State of DevOps Report you can read:

“We have found that having branches or forks with very short lifetimes (less than a day) before being merged into trunk, and less than three active branches in total, are important aspects of continuous delivery, and all contribute to higher performance. So does merging code into trunk or master on a daily basis.”

In later work, this finding has been repeatedly verified and the case is stronger than ever. There seems to be no ambiguity.

Trunk Based Development (TBD) and continuous integration improve software delivery performance.

And it is not just that it has a positive impact; the impact is on the same level as that of monitoring, deployment automation and code maintainability.

Unless branches are kept at a minimum and are very short-lived, some improvements to software delivery performance remain unattainable.
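The thresholds in the quoted finding (lifetimes under a day, fewer than three active branches) are concrete enough to check automatically. Below is a minimal sketch in Python, assuming the list of active non-trunk branches and their creation times is gathered from your VCS elsewhere; `Branch` and `branch_warnings` are hypothetical names, and whether trunk itself counts toward the three is an assumption left to you:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Thresholds from the State of DevOps finding quoted above.
MAX_BRANCH_AGE = timedelta(days=1)   # merge into trunk within a day
MAX_ACTIVE_BRANCHES = 3              # "less than three" active branches

@dataclass
class Branch:
    name: str
    created_at: datetime  # assumed to come from your VCS tooling (hypothetical)

def branch_warnings(active: list[Branch], now: datetime) -> list[str]:
    """Return warnings for branch counts or lifetimes outside the guideline."""
    warnings = []
    if len(active) >= MAX_ACTIVE_BRANCHES:
        warnings.append(f"{len(active)} active branches (aim for fewer than {MAX_ACTIVE_BRANCHES})")
    for branch in active:
        age = now - branch.created_at
        if age >= MAX_BRANCH_AGE:
            warnings.append(f"{branch.name} has lived {age} (aim for less than a day)")
    return warnings

now = datetime(2024, 1, 2, 12, 0)
active = [
    Branch("release-1.4", datetime(2024, 1, 2, 9, 0)),
    Branch("spike-cache", datetime(2024, 1, 1, 0, 0)),
]
for warning in branch_warnings(active, now):
    print(warning)  # spike-cache has lived 1 day, 12:00:00 (aim for less than a day)
```

A check like this reports rather than blocks, which keeps it in line with the non-blocking spirit of the process.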

And just to be clear: the delivery performance improvements shown in State of DevOps are not small… They are double-digit multipliers of improvement.

I believe continuous integration and frictionless flow of work are the most important measures to optimize for when designing a process and workflow. Optimizing for these seems to make everything else fall into place.

In this article I will dive into the software development process I have found does this best. Note that this article focuses on the software developer’s day-to-day work, not the surrounding process. That is, whether a team uses Scrum, SAFe, Shape Up, some type of waterfall or something completely different is (to a large degree) orthogonal to what is described here.

I have also left out which QA mechanisms are used (degree of automated tests, ratio of manual vs. automated, unit vs. integration), as I don’t see much difference between doing TBD w. NBR (Trunk Based Development with Non-Blocking Reviews) and Feature Branches with Pull Requests in that respect. Of course there are some differences, especially when comparing poly- with mono-repo, but I will leave that out of these articles, as the word count is already daunting…

With the above deemed out of scope, let’s jump into it.

TBD w. NBR

I get the least friction, the best pace and the most CI/CD value from doing trunk based development in a “mono”-repo. Features are implemented and matured using feature toggles.

Reviews are non-blocking, enabling quick feedback from both automated and manual tests.

I believe the process has a lot of subtle nudging effects that accumulate and induce people to use simpler and more incremental solution approaches.

Frequent deploys to production are assumed to be implicit in the goals of this “methodology” (or combination of methodologies). But the deploy frequency depends on many factors, and I do not see a specific deploy frequency as a prerequisite for getting a lot of the value out of the “TBD w. NBR” model.

So how does it actually work?

First of all, it should not be viewed as purist. There are always exceptions… (as we know painfully well… even well-tested code has exceptions…). Like everything interesting, it depends.

It is “80/20” rule oriented. That is, have a dominant default behavior/process, but be open to handle deviations. Let the default behavior be chosen by evaluating what commonly provides the most value, with the least cost or friction. And don’t let the outliers or anomalies define a costly or tedious process, “just in case”.

Trunk Based Development (TBD)

TBD is the idea that everyone integrates their changes to master as often as possible.

The focus in TBD is on Continuous Integration, i.e. getting everyone’s work integrated with everyone else’s. Quickly. Some teams find it useful to have very short-lived feature branches + pull requests for this. I will address this later; in this section I describe the process of “committing straight to master for close to all changes”.

TBD+CD does not mean continuous delivery to production. That is, it is not a requirement that every commit is immediately deployed to production. Nor is it required that master can be deployed, unexpectedly, at any time. I have encountered this assumption many times and would therefore like to get it out of the way.

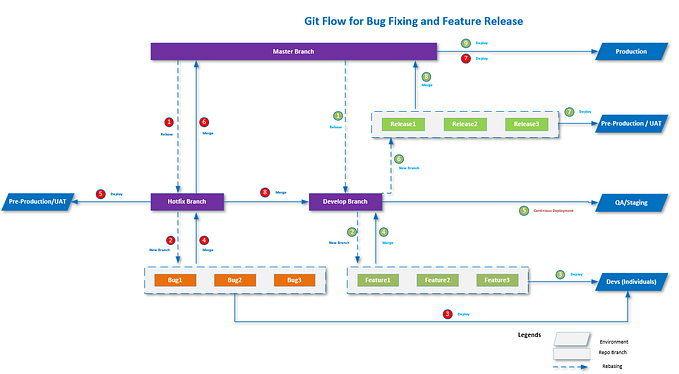

TBD allows release branches (1 at a time). I would actually recommend using release branches, unless you really are doing continuous delivery to production for each commit. (And good for you! :-))

It is also fine to have spike branches and the occasional “break-all-refactoring” branch. HOWEVER, branches should be kept short-lived and branching should be the exception. Refactoring branches in particular should be rare events. Spike branches can basically be throw-away branches, never to be merged from again. 90%+ of commits should happen on master, not in branches.

I have experienced that having ad hoc/part-time developers (e.g. student workers who are in 1 or 2 days a week) work in branches can be advantageous. The reason is that if a part-time developer breaks something for others, they are often not present to help fix it quickly when it is discovered. Furthermore, pre-integration review can be more relevant in these cases because more learning and coaching is happening. The latter could also apply to junior developers; however, in that case I think much more can be gained by pair programming or in-person code review.

It is allowed to break master/trunk

It is allowed to break master/trunk — however you will be embarrassed and highly motivated to avoid it… And when I say allowed, I mean people can make a mistake. It is not OK to be negligent.

There is a big difference between being repeatedly blocked and delayed by process and occasionally being blocked or delayed by someone making a mistake. In my view, choosing between the two is a no-brainer.

Feature Toggles

When I write feature toggles here, it is important to note a few things.

First, feature toggles can easily be used without TBD as well. They are, however, a much more critical practice in TBD. In TBD you cannot pretend you do not need feature toggles.

Feature Branching is a poor man’s modular architecture. Instead of building systems with the ability to easy swap in and out features at runtime/deploytime they couple themselves to the source control providing this mechanism through manual merging.

- Dan Bodart

In TBD we do not have the luxury of being able to “ignore” the need to turn off a feature. It needs to be knitted into the fabric of how we develop stuff, because we WILL have Work-In-Progress (WIP) code deployed to production. We thus need to safely be able to turn it off (and on).

Being able to have a feature toggled differently in different environments enables us to have it toggled on as an infant and toddler in a test environment. It can be toggled on in a demo or pre-prod environment when the feature is a bit more mature, and in production we can enable it for specific users, letting the feature evolve from teenager to (hopefully) well-adjusted adult. (Note: it is not a requirement for all features to be toggleable on a user-specific level.)

Ideally feature toggles are independent, but the real world is usually not ideal. In theory, theory and practice are the same; in practice, they are not, as the saying (often attributed to Einstein) goes. So evaluate what makes sense and don’t be religious about it.

If the code becomes simpler by letting one toggled feature depend on another, that might be a good tradeoff: an increase in dependencies in exchange for a reduction in complexity.

Feature toggling doesn’t need to be anything advanced or complicated. I would actually advise against that. Some features can be extremely complicated to turn on/off at runtime. If you don’t need that ability, don’t take on the complexity. The challenging, and important, thing about feature toggles is not how you turn features off and on. It is how you build things incrementally and in a toggleable way.
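To illustrate how simple toggling can be, here is a minimal sketch in Python of a static, per-environment toggle table read at startup, with an optional user-level opt-in for production and an optional dependency on another toggle. The feature names, environment names and the `Toggle`/`is_enabled` API are hypothetical, not taken from any particular toggle library:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Toggle:
    environments: frozenset[str]                    # on for everyone in these environments
    production_users: frozenset[str] = frozenset()  # user-level opt-in in production
    requires: Optional[str] = None                  # optional dependency on another toggle

# Hypothetical static configuration, read once at startup (no runtime switching).
TOGGLES = {
    "new-invoice-flow": Toggle(frozenset({"test", "preprod"}), frozenset({"alice"})),
    "invoice-pdf-export": Toggle(frozenset({"test"}), requires="new-invoice-flow"),
}

def is_enabled(feature: str, env: str, user: Optional[str] = None) -> bool:
    toggle = TOGGLES.get(feature)
    if toggle is None:
        return False  # unknown features are off by default
    if toggle.requires is not None and not is_enabled(toggle.requires, env, user):
        return False  # a dependent feature is only on where its dependency is on
    if env in toggle.environments:
        return True
    return env == "production" and user in toggle.production_users

def invoice_flow(env: str, user: Optional[str]) -> str:
    # The WIP code path is deployed everywhere but only reached when toggled on.
    if is_enabled("new-invoice-flow", env, user):
        return "new flow"
    return "old flow"  # existing behaviour stays the default

print(invoice_flow("production", "alice"))  # new flow
print(invoice_flow("production", "bob"))    # old flow
```

The existing behaviour remains the default path, so WIP code can be deployed to production and matured environment by environment without anyone outside the opt-in list seeing it.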

Non-blocking reviews

When I moved a team to TBD some years ago, we had a lot of discussions on how we would do reviews. One of the primary concerns, going away from feature branches, was the absence of Pull Requests as a means of review.

Personally, I like doing reviews, and I find there are very important gains to be had from them. If everyone participates, you get a shared understanding and sense of what is going on, and you catch the occasional bug, misunderstanding or straight-up fuckup. Reviews also induce a sense that “someone is going to read what I am writing now”.

Code reviews also appear to have a measurable positive effect on early bug detection, code quality and knowledge sharing.

What I really don’t want from a code review process is the flow of work being blocked, delayed or interrupted. That would create a nudging effect towards larger batch sizes and longer feedback cycle times. Judging from some of the findings linked above, the improvement in quality does not rely on “pre-integration” reviews. (Note: the improvement is tied to reviewing before deployment, not before integration.)

It also seems that more review comments lead to better code, and a way to get more comments is to get more people reviewing the code. However, with blocking reviews you must balance the gains from the review against the cost of interruptions and delayed integration of code and changes. Non-blocking reviews make it possible to keep reviews “open for comments” and let reviewers provide them when it best fits.

There is also a less visible, but in my view very real, nudging effect of letting the review act as a safety net or “responsibility transfer” from one developer to another. This is the case regardless of whether it is done by pull-requests or in-person-review-before-push.

Your change broke X — Someone

Well ‘Reviewer’ reviewed and approved it — Committer

This exchange has a few undesirable effects.

First, the “committer” is lulled into a false sense of safety that the reviewer will “QA it”. This risks nudging the committer into not testing the changes thoroughly, cleaning them up and ensuring everything is “by the book”, because any transgressions or mistakes will be caught in the review. It actually risks inducing a reduced sense of responsibility and ownership of changes.

Second, the reviewer gets the daunting (/impossible) or potentially extremely time-consuming task of ensuring that, on top of “everything being by the book”, the code change did not break anything, no tests are missing and appropriate quality assurance done.

Even if there is a Continuous Delivery environment or an extremely easy way of getting something running that enables (integration) testing of the change, the reviewer would still need an in-depth understanding of the change being made, its potential side effects etc. If the reviewer feels that responsibility and ownership are being transferred, it can result in reviews that are overly time-consuming and delaying.

In my experience only around 10% of commits and changes are actually worth a detailed review effort. This can of course vary a lot from place to place. But for most of the bread-and-butter code most people churn out, I believe it is much better to spend the bulk of your effort on the few important reviews than to distribute your energy evenly across all of them. (Research shows that reviewing more lines of code per hour greatly reduces Defect Removal Effectiveness, i.e. what we get out of the reviews. So spending review time wisely can impact quality a lot. (The Impact of Design and Code Reviews on Software Quality: An Empirical Study Based on PSP Data, 2009))

Typically you can identify the important ones very quickly, basically by skimming the commit(s) and spotting “weird” things or things worth spending a bit more time on. 80% or more of reviews are done with very little effort or complication, and letting them block would be a waste and introduce unwarranted delays in getting changes integrated and available for feedback and test.

Even with a good culture of reviewing pull requests quickly, NBR still adds speed of feedback by getting code integrated and out into test before reviews are finished, or have even started. And if reviewers are in meetings, off sick, or really busy with a bug or other tasks, they do not need to be interrupted, disturbed or asked to context switch to ensure changes can get integrated (or, alternatively, see the flow of work blocked).

For less seasoned programmers, reviewing is a great opportunity to get to read a lot of code — getting exposed to unknown-unknowns and ways of doing things. Again, this is a gain that should be had without a blocking workflow.

It is possible to have non-blocking reviews with pair programming or by going through commits or features after they have been integrated. These are very good practices. But there are now also tools that support non-blocking / post-code-integration reviews. Unfortunately they are not yet mentioned on https://trunkbaseddevelopment.com/continuous-review/, but I really hope to see more tools supporting NBR or post-code-integration reviews.

Upsource by JetBrains (https://www.jetbrains.com/upsource/) is one such tool (no affiliation). It is fairly simple, but it supports a workflow that fits TBD w. NBR very well. I have used it for a couple of years on different teams.

You can set up Upsource to create a review for each commit, or to add commits to an existing review (if they are tagged with a ticket/task number, e.g. by # prefix). You can set how many reviewers should be assigned, along with various other features.
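The grouping behaviour described above can be sketched as follows. This is not Upsource’s API; it is a hypothetical Python illustration, assuming commit messages reference tickets as `#<number>` and that reviewers are picked round-robin from the team, excluding the commit author:

```python
import re
from collections import defaultdict

# Hypothetical convention: a ticket reference like "#1234" in the commit message.
TICKET = re.compile(r"#(\d+)")

def group_into_reviews(commits: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (sha, message) pairs into reviews.

    Commits tagged with the same ticket share one review; untagged
    commits each get a review of their own.
    """
    reviews: dict[str, list[str]] = defaultdict(list)
    for sha, message in commits:
        match = TICKET.search(message)
        key = f"ticket-{match.group(1)}" if match else f"commit-{sha}"
        reviews[key].append(sha)
    return dict(reviews)

def pick_reviewers(author: str, team: list[str], count: int, offset: int) -> list[str]:
    """Pick up to `count` reviewers round-robin, never the author themselves."""
    candidates = [member for member in team if member != author]
    if not candidates:
        return []
    return [candidates[(offset + i) % len(candidates)]
            for i in range(min(count, len(candidates)))]

commits = [("a1", "#12 add endpoint"), ("b2", "fix typo"), ("c3", "#12 handle errors")]
print(group_into_reviews(commits))
# {'ticket-12': ['a1', 'c3'], 'commit-b2': ['b2']}
print(pick_reviewers("ann", ["ann", "bo", "cy", "di"], count=2, offset=1))
# ['cy', 'di']
```

Because the review is created after the commit lands on master, nothing here gates integration; the grouping only decides where comments end up.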

It supports a dashboard showing pending reviews, raised concerns and similar. There are a few extra features that would be nice to have on the dashboard, and there are signs that the tool may be bloating elsewhere. But for now it is a very good tool for doing non-blocking reviews and keeping track of them. Many may be wary of reviewing something that might change later (i.e. reviewing a commit with a bug that has already been fixed in a later commit). However, I think the gains from CI, spotting things early and awareness of WIP greatly outweigh the occasional redundant review. I would rather have a ball caught twice than have it fall to the ground.

It should be noted that there is very much a cultural task in ensuring that reviews do not pile up. I think visualizations will help with this. With pull requests, the blocking has a built-in nudge (/carrot) for the committer to nag (/whip) reviewers. This is not present with post-CI reviews, so it is something teams should be aware of and focus on.

If there is a requirement that code is reviewed before it goes to pre-prod or production, that is a hammer that can be used.

And shame is also somewhat efficient.

Continuous Delivery to Test

Continuous delivery of all integrated code to a test environment is extremely useful.

When people talk about continuous delivery, there is a risk of getting hung up on the term as meaning continuous delivery to production. However, a majority of the benefits are already gained by having continuous delivery to a test environment. And having that in place typically means that deployment to production also becomes routine.

If continuous delivery to test is used correctly, it can reduce feedback latency from “non-coders”, let you fail (and fix) fast if something gets broken, and make it easy to review “the feature” (instead of just the code, a reviewer can look it up and try it out in test).

Finally, it forces the developers to keep a working version of the application/system/integrations running, ensuring that mocks, stubs and test data are always available.

I have seen people “prioritize/optimize” the test environment setup away, postponing it to some QA phase, so many times. Every single time the QA phase approaches and people start setting up the test environment, everything explodes. Dependencies have been overlooked. Mocks and stubs are missing. No “backdoors” or feature toggles for dummies or similar have been introduced. Finally, the other feature on which “your” feature depended got delayed, and because no decoupling/mock had been introduced, your feature cannot be tested. I can’t count how many times a hardcoded piece of JSON or similar dummy data in an integration endpoint has saved the day.

I have even seen JSON documents on disk (i.e. what had been created for test) used in production as a substitute for the actual endpoint of an external service. The external service kept getting delayed, and having a daily updated file was fine in the startup phase.
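A minimal sketch in Python of the kind of stand-in described above, assuming a hypothetical `CustomerSource` that reads a JSON file until the real external service is available; the class, the file name and the data are illustrative only:

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Optional

class CustomerSource:
    """Hypothetical adapter for an external customer service.

    Until the real service exists, data is read from a JSON file on disk
    (for example one refreshed daily), so the feature can be tested and demoed.
    """

    def __init__(self, stub_file: Path,
                 fetch_remote: Optional[Callable[[], list]] = None) -> None:
        self.stub_file = stub_file
        self.fetch_remote = fetch_remote

    def customers(self) -> list:
        if self.fetch_remote is not None:
            return self.fetch_remote()  # the real integration, once it exists
        return json.loads(self.stub_file.read_text(encoding="utf-8"))

# Usage: write a stub file, then swap in the "real" fetcher when it is ready.
stub = Path(tempfile.mkdtemp()) / "customers.json"
stub.write_text(json.dumps([{"id": 1, "name": "Test Customer"}]), encoding="utf-8")

source = CustomerSource(stub)
print(source.customers())  # [{'id': 1, 'name': 'Test Customer'}]

live = CustomerSource(stub, fetch_remote=lambda: [{"id": 2, "name": "Live Customer"}])
print(live.customers())    # [{'id': 2, 'name': 'Live Customer'}]
```

Keeping the stub behind the same interface as the real integration means the rest of the code never needs to know which one it is talking to, which is what lets the feature be tested before its dependency arrives.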

Having a test environment always running reduces the friction of trying something out or showing something to another team member, colleague or end user. Quickly investigating a hunch, a bug or just how the system works requires much less effort, and is therefore more likely to happen. It enables some of the “immediate feedback” described in Bret Victor’s talk “Inventing on Principle”, which is one of the core benefits of CI/CD.

Mono or corpulent-repo

I like having multiple related services in a single repository, enabling people to check everything out in a “consistent” state. The CI/CD process is also simplified. HOWEVER, I do not subscribe to the view that mono-repo requires an entire company to have only a single repository. In my view it is about making repositories as big as makes sense, and not, as I have the impression many people feel they need to, as small and “single-service” as possible. Decoupling services by putting them in different repositories introduces a lot of extra complexity, and that complexity should be worth it. You also miss out on a lot of the opportunities available in a corpulent/mono-repo.

For some reason people have become obsessed with having repositories as small as possible. There appear to be various reasons for this. Some people think that you can only have one buildable/deployable/container per repository, and thus conclude that having multiple services in one repository is not possible. Or at least that if things are in the same repository, they should be deployed at the same time.

Another view is that having multiple services or functionalities in one repository equates to building a monolith (which is implicitly viewed as a very bad practice). Or, if not a VCS-monolith, that it risks tempting developers to cross boundaries between services or responsibilities (Downside 1: Tight coupling and OSS).

Many also mention the size of the repo and fear of conflicts.

As I mentioned in the beginning, I don’t want to spend this “chapter” criticizing, so I won’t address the concerns above here. Some of them will be addressed in the “sister article”.

People may provide several other reasons, but basically I don’t see the advantages of poly- or nano-repo coming anywhere near outweighing the advantages of a corpulent-repo. Phrased differently, I see no need to strive for smaller repositories. Rather, when in doubt, err on the side of a bigger repository.

I am not saying that there cannot be services that live in their own repository. There certainly can be, and very good reasons for this exist. However, I would steer clear of it as a default decision, and instead default to having code in the same repository or a few big repositories. Then handle the “single-service repository” as an exception, when the actual need arises.

Having “all” related code in a single repo brings a lot of advantages. Cross-cutting changes can be done in a single atomic commit, or associated commits (more in next section about this).

There are also the advantages of being able to simply reference libraries or shared API models/specifications. Instead of requiring people to update a shared package, publish it, update its reference, and furthermore not being able to test it during the changes, a much simpler, quick-feedback workflow can be used. Simply make the change, and if you break anything, you will know very quickly, and you will get your changes out very quickly. It is simpler to debug shared packages, and simpler to apply a fix and get it out to all the places it is used.

Corpulent-repo enables quicker iterations, better/simpler consistency of work and simpler atomic cross-cutting changes than poly- or micro-repo does. It does come with some major consequences though. Changes to shared code can much more quickly break other components or services. However, I actually see this cost as a benefit, as it encourages implementing changes in a better way, and fixing the other components can be done as part of the change. But as with everything else, it depends. Maybe versioned packages are better in your specific case, but most often I find that is not the case for 80+% of the shared code in a system landscape.

There are cases where packages are shared by a lot of different services, where versioning is more important and backwards compatibility essential. But (broadly speaking) for the majority of service/application couplings, the coupling is 1-to-1, or 1-to-very-few. There is a somewhat related finding in a study by Søren Lauesen of a quite big project using SOA: of around 1600 services, around 100 were reused once, and only 20 were reused several times.

The promises were that you could define the services up front in a logical and business-oriented fashion and reuse them in many projects. This wasn’t the case in practice. The integration platform got 1,600 services. Around 100 of them were reused once and 20 of them several times. The rest were essentially point-to-point connections. Further, performance was low and availability vulnerable as a system would seem down when one of the systems it depended on was down. To provide sufficient availability, systems replicated data that belonged to other systems.

- Damage and Damage Causes in large government IT Projects — 4. Debt Collection, cause A5.

Enforcing quite a big overhead (as seen elsewhere in the report) on 1600 services, even though the reuse requirement only applied to about 100 of them (roughly 6%), is obviously not a well-designed business case. It later turned out that:

The ambitious SOA requirements were not really the customer’s needs, but an idealistic concept enforced by the customer’s consultant’s IT architect. It took a long time to replace these ideals with something pragmatic, and it increased cost and time.

So instead of designing the work for the “most complicated denominator”, handle the anomalies as the anomalies they are, keep everything simple, and adopt a process optimized for the default case of 1-to-1 couplings between services, or for not splitting things out into services at all. Or at least don’t put them in different repositories if they should or could always be kept in sync. One way to do this is to make cross-cutting changes simple, which is the case in a corpulent-repo.

I see the primary advantages of Mono/corpulent-repo being:

- Induce more shared code ownership

- Easier to do cross functional changes

- Easier to figure out what to change, (by seeing commits for similar previous changes)

- Easier to do full stack testing

- Atomic commits for cross-cutting changes

- Easy dependency management (self-compatible)

- Documentation through Git history, also for cross-cutting changes

- Less branch and “up-to-date” confusion.

There is of course the risk of people coupling components or code together, where it is not necessary or is not desirable. This is something teams should keep an eye on in reviews.

However, I think this risk is exaggerated, and in any case I do not believe “repository strategy” is among the top 3 countermeasures against this issue. I would also expect more time to be available for these countermeasures and reviews, as much less time is spent worrying about keeping several repositories on the “same” branch and all of them up to date… and cleaning up after, or figuring out, one or more repositories not being in the expected state because of a wrong branch or a missing pull…

A few principles

Lastly, I would like to flesh out a few inherent principles related to the TBD w. NBR methodology described.

Whenever we face a choice where we hear the voice in our head go:

…as a professional modern developer, I ought to….

or feel the judgement of others hovering above our heads, we should stop and think. These moments are often related to whether to put in extra effort or cost to achieve something. Often something a bit esoteric, or with no very specific long-term gain, that we may not need yet. E.g. independent deploy schedules for services, scalability of the organization, scalability of the system or quality-inducing processes.

The added effort or cost should only be invested if we actually need it. If you have a simple back office system with 10 users, then please don’t focus on horizontal scaling or use it as an argument for Docker+OpenShift. Uptime may also not be important in that case.

Only pay, in effort or cost, for what you are actually in need of. And you are going to use.

I know this appears to be self-evident. However, I have surprisingly (and shockingly) often experienced that it is not the case… (Most of my previous pieces are about this. And yes, it is one of my pet peeves.)

Not convinced by the above description of TBD w. NBR and the reasoning provided to jump on the bandwagon? Then let’s look at why I think purist feature branches & pull requests (FB&PR), if not bad practice, at least introduce a lot of counterproductive process and impede software delivery performance. Impediments you would be better off without.

Next article in this series: How feature branches and pull requests work against best practice