Riding on a beam of light

Did you ever wonder what it would be like to ride on a beam of light? Albert Einstein did.

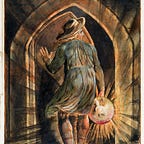

He tried to picture what it would be like to travel so fast that you caught up with a light beam. From such thought experiments, Einstein was able to see that if two clocks each sent out a light signal the instant they struck the hour, a person traveling superfast toward one of the clocks would have a different view of whether the clocks were in sync than someone traveling superfast in the other direction. Much of what Dr. Einstein once imagined has since been proven, and it is exactly this kind of imagination the poet William Blake had in mind when he wrote, “What is now proved was once only imagined.”

Jacob Bronowski, a mathematician, physicist, and Blake scholar, wrote: “To imagine is the characteristic act, not of the poet’s mind, or the painter’s mind, or the scientist’s, but of the mind of man.” Artificial intelligence (AI) technology is being used to extend the mind of man, to help us wonder what it would be like to ride on a beam of light.

Sadly, even with all these encouraging words from great scientists and artists, we still get commentary like the following when faced with emerging AI and robotic technology:

- “Robots are likely to take 45% of all the jobs over the next few decades.”

- “Robots will eliminate 6% of all US jobs by 2021, report says”

- “Robots Are Stealing American Jobs, According to MIT Economist”

- Rise of the Robots: Technology and the Threat of a Jobless Future

I’ve often asked: why not support humans with computers instead of substituting computers for humans? Ever since Adam Smith, the answer has been clear, at least to the thousands who graduate from MBA programs at colleges and universities throughout the developed world. These future business leaders are drilled in the idea that automating human work with machines lowers unit labor costs and is therefore not only good business but also a competitive necessity, because firms that do not automate will be beaten by competitors that do.

Young business managers trying to get ahead are obsessed with operating income, which equals sales minus expenses, and human labor is more often than not the largest of those expenses. Hence: “Here come the robots.”

But now, in an article titled “des.ai.gn — Augmenting human creativity with artificial intelligence”, Norman Di Palo offers an alternative to the conventional economic doom and gloom: he describes how AI can support human creativity rather than merely substitute for it. Finally! Somebody crazy enough to answer the question I’ve been asking for years.

Instead of viewing artificial intelligence as a threat, Di Palo sees it as an opportunity. He’s working “to revolutionize the way designers work with artificial intelligence.” Here’s what he did:

- Created a small dataset of chairs and sofas from the internet (around 500 samples).

- Implemented a neural network architecture that could extract visual features from the unlabeled data. The objective was not just to understand what a chair looks like but to understand “what it means to be a chair” and to search for images that have the same “meaning.”

- Reconstructed the original image from those features as closely as possible.

- Projected the entire dataset into the latent space, which allowed him to compute distances between samples and thus perform “reverse image search.”
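The search step in the list above can be sketched in a few lines. This is a minimal illustration, not Di Palo’s code: it assumes every image has already been passed through a trained encoder that outputs a latent vector, and the file names and three-dimensional vectors below are made up. Images are ranked by plain Euclidean distance to the query’s vector.

```python
import math

def reverse_image_search(query_vec, database, k=3):
    """Return the names of the k database images whose latent vectors are
    closest (by Euclidean distance) to query_vec.

    database: dict mapping an image name to its latent vector. In a real
    system these vectors would come from a trained encoder (assumed here).
    """
    ranked = sorted(database.items(), key=lambda item: math.dist(query_vec, item[1]))
    return [name for name, _ in ranked[:k]]

# Hypothetical latent vectors; a real encoder would produce far more dimensions.
latent_db = {
    "armchair_01.png": [0.9, 0.1, 0.3],
    "armchair_02.png": [0.8, 0.2, 0.35],
    "sofa_01.png": [0.1, 0.9, 0.7],
    "stool_01.png": [0.5, 0.5, 0.0],
}

query = [0.85, 0.15, 0.3]  # latent vector of a new chair photo (made up)
print(reverse_image_search(query, latent_db, k=2))
# → ['armchair_01.png', 'armchair_02.png']
```

Euclidean distance is simply the most straightforward choice; swapping `math.dist` for another measure changes what “similar” means in the search.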

According to Prasoon Goyal, a PhD student in AI at UT Austin, the “latent” in “latent space” means “hidden.” The word is used pretty much that way in machine learning: you observe some data in the space that is observable and map it to a latent space where similar data points lie closer together, which is how a model can capture “what it means to be a chair.” Prasoon uses the following four images as examples:

In the pixel space that you observe, there’s no immediate similarity between any two images. However, if you were to map them to a latent space, you would find the images on the left closer to each other than to any of the images on the right. So your latent space captures the structure of your data. Similarly, in Latent Dirichlet Allocation (LDA), a topic model for text, you model the task so that documents about similar topics are closer together in the latent space.

You want to map images to a latent vector space such that images with similar “meaning”, based on their data representations, are closer in that space.
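A toy example can make the pixel-space versus latent-space contrast concrete. This is not how Di Palo or Prasoon built anything: the one-dimensional “images” and the hand-written `to_latent` function (which only measures bar length) are invented stand-ins for real images and a learned encoder.

```python
import math

# Toy one-dimensional "images": each list is a row of pixels (1 = ink).
bar_left = [1, 1, 0, 0, 0, 0]    # a short bar on the left
bar_right = [0, 0, 0, 0, 1, 1]   # the same short bar, shifted right
long_bar = [1, 1, 1, 1, 1, 0]    # a much longer bar

def to_latent(image):
    """Hypothetical stand-in for a learned encoder: describe an image only
    by the length of its bar, ignoring where the bar sits."""
    return [sum(image)]

def pixel_distance(a, b):
    """Euclidean distance comparing the images pixel by pixel."""
    return math.dist(a, b)

def latent_distance(a, b):
    """Euclidean distance between the images' latent representations."""
    return math.dist(to_latent(a), to_latent(b))

print(pixel_distance(bar_left, bar_right), pixel_distance(bar_left, long_bar))
print(latent_distance(bar_left, bar_right), latent_distance(bar_left, long_bar))
```

Compared pixel by pixel, the shifted short bar is farther from the original than the long bar is; in the latent space, the two short bars coincide. A trained network learns its own features rather than relying on a hand-picked one like bar length.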

Latent, or hidden, variables differ from observed variables in that they are not measured directly. Instead, we infer their existence and relationships mathematically from the variables we can observe. It is similar to the technique for finding planets orbiting distant stars. The exoplanets are usually far too dim to be seen directly, but they can be detected indirectly, both by the gravitational wobble they induce in their parent star and by the small amount of starlight they block as they pass between their star and our telescopes.

According to Di Palo, having a reference design makes it easier to find inspiration for new designs. Another interesting application is mixing two samples from the dataset to generate new ones that share visual features with both. In particular, it’s possible to mix the designs of two different chairs to obtain a new chair that is aesthetically coherent and shares design details with both. This is done by averaging the latent-space representations of the two chair designs. A weighted average even gives access to a great number of nuances, such as a new design that is closer to one “parent” than to the other. This technique lets a designer experiment with new ideas immediately, mixing interesting designs until some result sparks his or her creativity. The adjacent picture shows some interesting results presented by Di Palo.
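The blending described above amounts to a weighted average of two latent vectors. The sketch below assumes a trained encoder and decoder exist (neither is shown), and the four-dimensional chair vectors are invented for illustration; it shows the arithmetic of the idea, not Di Palo’s implementation.

```python
def blend(z_a, z_b, weight):
    """Weighted average of two latent vectors.

    weight = 0.0 returns z_a, weight = 1.0 returns z_b, and values in
    between give designs that lean toward one "parent" or the other.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - weight) * a + weight * b for a, b in zip(z_a, z_b)]

# Hypothetical latent vectors for two chair designs.
chair_a = [0.2, 0.8, 0.0, 0.4]
chair_b = [0.6, 0.0, 1.0, 0.4]

for w in (0.0, 0.25, 0.5, 0.75, 1.0):
    z_new = blend(chair_a, chair_b, w)
    # In a real system, z_new would be passed to the trained decoder
    # (assumed, not shown) to render the new chair as an image.
    print(w, [round(x, 2) for x in z_new])
```

Stepping the weight from 0 to 1 walks along the straight line between the two designs in latent space; a plain 50/50 average is just the midpoint.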

Di Palo applied the same techniques to fashion design, in particular textile design, with similar results. He developed this branch of the project further and created a neural reverse image search that works with simple drawings made in a web app. In the demo, a user sketches a pattern he or she would like to find in a database of textile patterns, something as simple as the crossed lines below. The neural network extracts visual features from the sketch, compares them with those extracted from the database images, and returns the images that match most closely.
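The comparison step can be sketched with cosine similarity, which compares only the direction of two feature vectors and ignores their overall magnitude. That choice is an assumption made here for illustration, not something the article specifies, and the feature vectors (imagined as responses to horizontal lines, vertical lines, and dots) are invented.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def match_sketch(sketch_features, pattern_features, k=3):
    """Rank textile patterns by cosine similarity to the sketch's features."""
    scores = {name: cosine_similarity(sketch_features, feats)
              for name, feats in pattern_features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Hypothetical feature vectors: [horizontal lines, vertical lines, dots].
patterns = {
    "tartan.png": [0.9, 0.9, 0.0],
    "pinstripe.png": [0.0, 1.0, 0.0],
    "polka_dot.png": [0.0, 0.0, 1.0],
}

# A faint sketch of crossed lines: weak responses, but in the same
# proportion as the tartan's.
sketch = [0.2, 0.2, 0.0]
print(match_sketch(sketch, patterns, k=2))
# → ['tartan.png', 'pinstripe.png']
```

With Euclidean distance on these same made-up vectors, the faint sketch would land closer to the pinstripe than to the tartan, simply because its features are small in magnitude; cosine similarity looks past that and matches the crossed-line shape.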

Di Palo’s work could be extended to other fields, such as forensic science. Think of Angela Montenegro-Hodgins, the fictional character on the television show Bones, portrayed by Michaela Conlin: a classically trained artist who works on forensic reconstructions.

Researchers at the University of Twente, in the Netherlands, use machine learning techniques for person recognition based on biometric traits such as faces and fingerprints, mostly working with image data. They’ve developed a reconstruction method for real-world camera images that addresses the more difficult problem of “side-view” face recognition. The method is used to recognize people as they pass through a door and then “estimate” their location in a building. According to the researchers, the method yields a better score in ninety percent of the examined cases and helps forensic investigators in their daily work.

Unlike other 3D reconstruction methods, the new method does not use prior knowledge of facial features in the form of a facial model and is therefore not “biased,” which is of vital importance to forensic facial comparisons. With this method, a 3D facial model and a frontal profile are reconstructed on the basis of images of faces in different poses.

Artificial intelligence is also changing plastic surgery. With the ubiquity of smartphones, image data is more abundant than ever. Computers are often better than humans at identifying patterns, which makes them particularly strong allies in highly visual fields such as plastic and reconstructive surgery, where they can help detect and diagnose conditions, monitor patients, and assess outcomes. AI can significantly enhance all of these tasks, in part because images and videos are already part of the plastic surgeon’s craft: they are how plastic surgeons communicate complex problems to their colleagues, explain surgery to patients, and monitor the outcomes of operations.

In burn care, AI can not only accurately assess the total surface area of a burn, which is essential for proper treatment, but also predict whether a burn wound will heal without surgery. In one study, researchers used reflectance spectrometry and an artificial neural network to predict whether a burn wound would take more or less than 14 days to heal.

Craniofacial surgery involves moving the bones, muscles, and skin of the skull and face. Some infants are born with a congenital condition known as craniosynostosis, a disorder of bone growth in which the seams between the bones of the skull fuse too early; it can cause an abnormal head shape, increase pressure on the brain, and delay growth. Babies as young as two months old may undergo major craniofacial surgery to reshape their skulls, so detecting the condition early is better. Plastic surgeons often use pictures of babies’ heads and CT scans to examine skull shape and plan surgeries. Researchers recently trained an AI app to classify the shape of a baby’s skull in order to better catch early signs of craniosynostosis. This technology can be used to screen children and reduce the number of X-rays or CT scans a baby needs for diagnosis.

Fashion design, forensic reconstruction, burn care, plastic surgery: these are all areas of important work being advanced with the tools and techniques of AI. Yet our society seems obsessed with the evils of robots. Robotic characters, androids (artificial men or women), gynoids (artificial women), and cyborgs (also “bionic men/women,” humans with significant mechanical enhancements) have become a staple of science fiction, and, surprising as it may be, I now understand that such figures go back as far as Homer.

The first reference in Western literature to “mechanical servants” appears in Homer’s Iliad. In Book XVIII, Hephaestus, god of fire, creates new armor for the hero Achilles, assisted by robots. According to the Rieu translation, “Golden maidservants hastened to help their master. They looked like real women and could not only speak and use their limbs but were endowed with intelligence and trained in handwork by the immortal gods.”

Of course, the words “robot” or “android” were not used to describe them, but they were nevertheless mechanical devices, human in appearance. “The first use of the word Robot was in Karel Čapek’s play R.U.R. (Rossum’s Universal Robots) (written in 1920)”.

The Star Wars characters R2-D2 and C-3PO may have turned our understanding of robots around a bit, but we still need to overcome our fear of the impending Robopocalypse and get back to wondering what it would be like to ride on a beam of light.

___________________________________________________________________

Notes:

4. https://www.popsci.com/technology/article/2010-10/robots-are-stealing-american-jobs-economists-say

6. Principles of Management Accounting: https://accountlearning.com/principles-management-accounting/

7. Norman Di Palo, “des.ai.gn — Augmenting human creativity with artificial intelligence”

8. https://research.utwente.nl/en/publications/side-view-face-recognition-2

9. https://www.quora.com/What-is-the-meaning-of-latent-space

10. University of Twente, January 21, 2016: https://www.sciencedaily.com/releases/2016/01/160121100957.htm

11. https://venturebeat.com/2017/10/27/artificial-intelligence-is-changing-the-face-of-plastic-surgery/